AI, ML, ChatOps, and MLOps: a Real Understanding of Artificial Intelligence

Table of Contents

OpenAI on OpenShift - This article is part of a series.

In this post, we’ll deep dive into some foundational concepts in AI and Machine Learning, such as Large Language Models (LLMs), Model Servers, Model Scaling, AI Workload Scaling, and more. We’ll also explore the concepts of MLOps and ChatOps. Our discussion will frequently reference OpenAI’s GPT-4, Kubeflow, and other OpenShift tools to illustrate these concepts in a real-world context.

Introduction #

AI, or Artificial Intelligence, is an umbrella term that encompasses various subfields, one of which is Machine Learning (ML). Machine Learning is a method of data analysis that automates analytical model building. It uses algorithms that iteratively learn from data, allowing computers to find hidden insights without being explicitly programmed where to look.

Large Language Models (LLMs) #

Large Language Models like OpenAI’s GPT-4 ( ChatGPT) are a type of AI that use machine learning to generate human-like text. They are trained on a diverse range of internet text, allowing them to generate coherent and contextually relevant sentences by predicting the likelihood of a word given the previous words used in the text.

Training an LLM involves providing it with a large corpus of text data. The model learns to understand the context and semantics of language by predicting the next word in a sentence. The ‘serving’ or deployment of these models involves integrating them into applications to generate text, answer queries, write essays, and much more.

MLOps and ChatOps #

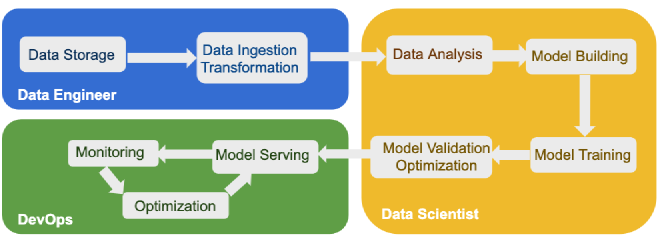

MLOps, or Machine Learning Operations, is a practice for collaboration and communication between data scientists and operations professionals to help manage production ML (or deep learning) lifecycle. It seeks to increase automation and improve the quality of production ML while also focusing on business and regulatory requirements.

ChatOps, on the other hand, is a model that integrates work-related conversations with technical processes and tools into a single unified platform. It allows for the execution of scripts and automation that include a bot in the conversation loop alongside team members.

Model Servers and Model Scaling #

Model servers are frameworks designed to host machine learning models and serve predictions in real-time. They handle tasks like load balancing, model versioning, metrics tracking, and more. Examples include the Seldon Core, which we’ve used in several other posts in this series to serve the OpenAI LLM on OpenShift.

Model scaling is about managing and adjusting the computational resources allocated to a model to match the demand. This involves both ‘scaling up’ (adding more resources to a single node) and ‘scaling out’ (adding more nodes to the system).

AI Workload Scaling #

AI workload scaling is a broader concept that includes model scaling. It also involves the scaling of data ingestion, preprocessing, and analysis operations, often across distributed systems. Kubernetes and OpenShift, along with tools like Kubeflow, provide robust support for AI workload scaling.

Putting the Concepts into Practice #

Deep Dive with OpenAI, ChatGPT, and OpenShift #

OpenAI’s GPT-4, a state-of-the-art LLM recently made famous by ChatGPT, exemplifies many of these concepts. It’s trained on a large corpus of text data and can generate impressively human-like text. It’s a perfect example of a model that needs robust model serving and scaling strategies due to its large size and resource requirements.

Kubeflow, a machine learning toolkit for Kubernetes, is a powerful tool for managing the lifecycle and workloads of such models. It provides functionalities for training ML models, serving them via servers like Seldon Core, and scaling them to meet demand.

By integrating Kubeflow with OpenShift, you can leverage the powerful capabilities of both platforms to manage your ML workloads effectively. OpenShift’s robust container orchestration capabilities, combined with Kubeflow’s ML-specific functionality, offer a comprehensive solution for MLOps.

Conclusion #

Understanding AI, ML, MLOps, ChatOps, LLMs, and concepts related to model and AI workload scaling are key to navigating the landscape of modern AI and ML applications. Tools like OpenShift and Kubeflow offer robust solutions for managing these complex systems, from training large language models like GPT-4 to serving and scaling them efficiently.

References #

- What Are LLMs and Why Are They Important? | NVIDIA Blog

- Hugging Face – The AI community building the future. - The leading community for open source development around AI.

- Kaggle - Probably THE most popular site for free Data Science datasets.

- Kubeflow Documentation

- OpenShift Documentation

- Seldon Core Documentation

- Introduction to MLOps

- Collaborate by using ChatOps - IBM Garage Practices