Leveraging Tekton for AI Workloads on OpenShift

Table of Contents

AI/ML OCP Tooling - This article is part of a series.

Synopsis #

In this post, we delve into the application of Tekton, a powerful Kubernetes-native continuous integration and continuous delivery (CI/CD) solution, for managing AI workloads on an OpenShift cluster. We’ll discuss the advantages of Tekton for AI/ML workflows and provide detailed examples and configurations.

Introduction #

Tekton introduces a cloud-native mechanism to define and run CI/CD pipelines, making it a fitting tool for managing AI/ML workloads. From automating model training to deploying models into production, Tekton can streamline your AI/ML workflows on OpenShift.

Tekton Overview #

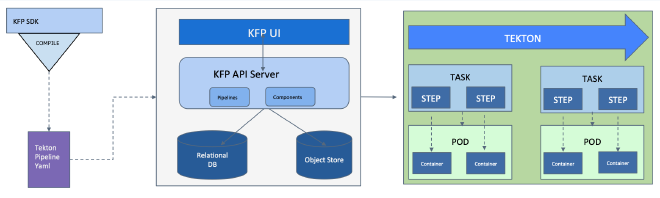

Tekton provides Kubernetes custom resources to define pipelines, tasks, and runs. A Task is a collection of steps that perform a specific job, like training a model or deploying an application. A Pipeline groups multiple tasks together, and a PipelineRun is an execution of a pipeline.

Tekton for AI/ML Workflows #

Let’s examine how Tekton can streamline AI/ML workflows on OpenShift, with specific examples.

1. Automating Model Training #

You can define a Tekton task to automate the model training process. This task might include steps to pull the training data from a data source, run the training job, and save the trained model to a model registry. Here’s an example configuration:

apiVersion: tekton.dev/v1beta1

kind: Task

metadata:

name: train-model

spec:

steps:

- name: pull-data

image: <your-data-image>

script: |

# Pull data from your data source

- name: train

image: <your-training-image>

script: |

# Run training job

- name: save-model

image: <your-model-image>

script: |

# Save model to model registry

2. Deploying Models #

A Tekton task can also handle model deployment. This task might include steps to pull the trained model from the model registry, create a SeldonDeployment or a KFServing InferenceService to serve the model, and expose a service to access the model.

apiVersion: tekton.dev/v1beta1

kind: Task

metadata:

name: deploy-model

spec:

steps:

- name: pull-model

image: <your-model-image>

script: |

# Pull model from model registry

- name: deploy

image: <your-deployment-image>

script: |

# Deploy model using Seldon or KFServing

- name: expose-service

image: <your-service-image>

script: |

# Expose service to access the model

3. CI/CD Pipeline for AI/ML #

You can orchestrate these tasks into a CI/CD pipeline to automate the entire AI/ML workflow. The pipeline can be triggered by events such as a new data arrival or a code update, enabling continuous integration and deployment of your AI/ML workloads.

apiVersion: tekton.dev/v1beta1

kind: Pipeline

metadata:

name: ai-pipeline

spec:

tasks:

- name: train-model

taskRef:

name: train-model

- name:

deploy-model

taskRef:

name: deploy-model

runAfter:

- train-model

Conclusion #

Tekton presents a powerful tool for managing AI workloads in an OpenShift cluster. By automating and streamlining AI/ML workflows, Tekton ensures faster, more reliable, and repeatable processes. This ultimately leads to a more efficient and agile machine learning lifecycle.

References #

- OpenShift Documentation

- Tekton Documentation

- Getting started with Tasks | Tekton

- Kubeflow Pipelines with Tekton

- Tekton Pipeline Examples

- Open Data Hub and Tekton