Leveraging NVIDIA GPUs in OpenShift Virtualization for ML Model Training

Virtually Containers - This article is part of a series.

Introduction #

Hello, tech enthusiasts! Nick Miethe here from MeatyBytes.io. In our previous post, we explored OpenShift Virtualization in detail. Today, we’re taking it a step further by discussing how to deploy virtual machines (VMs) in OpenShift Virtualization with NVIDIA GPUs enabled.

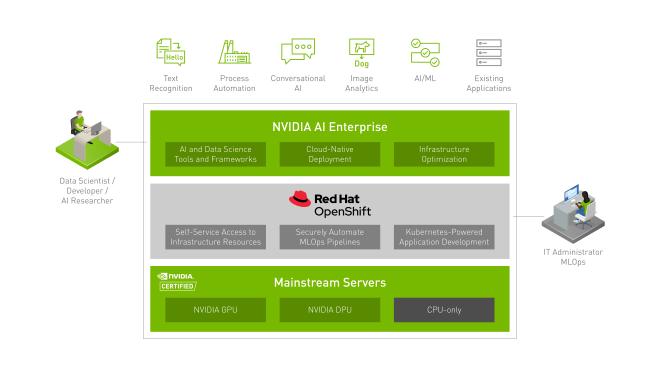

This setup is particularly useful for machine learning (ML) model training, graphics display, and other GPU-intensive tasks. If you haven’t already, I recommend you check out our AI/ML and OpenAI on OCP series as well. So, let’s dive in!

Hardware Requirements #

To enable NVIDIA GPUs in OpenShift Virtualization, you’ll need the following hardware:

- A server with one or more NVIDIA GPUs installed. The specific GPU model will depend on your workload requirements.

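- A CPU that supports hardware virtualization (Intel VT-x or AMD-V), which is necessary for running VMs in OpenShift. For GPU passthrough, the platform also needs an IOMMU (Intel VT-d or AMD-Vi) that is enabled in the firmware and in the kernel.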
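Enabling the IOMMU in the kernel is usually done on OpenShift with a MachineConfig. The following is a minimal sketch, assuming Intel worker nodes; the resource name `100-worker-iommu` and the Ignition version are illustrative and should be matched to your cluster. On AMD hosts the kernel argument would be `amd_iommu=on` instead.

```yaml
apiVersion: machineconfiguration.openshift.io/v1
kind: MachineConfig
metadata:
  labels:
    # Targets the worker pool; adjust if your GPU nodes sit in another pool.
    machineconfiguration.openshift.io/role: worker
  name: 100-worker-iommu   # illustrative name
spec:
  config:
    ignition:
      version: 3.2.0       # assumption: match your cluster's Ignition version
  kernelArguments:
    - intel_iommu=on       # assumption: Intel hosts; use amd_iommu=on for AMD
```

Applying a MachineConfig causes the nodes in that pool to reboot one at a time, so plan for it on a busy cluster.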

GPU Passthrough Technology #

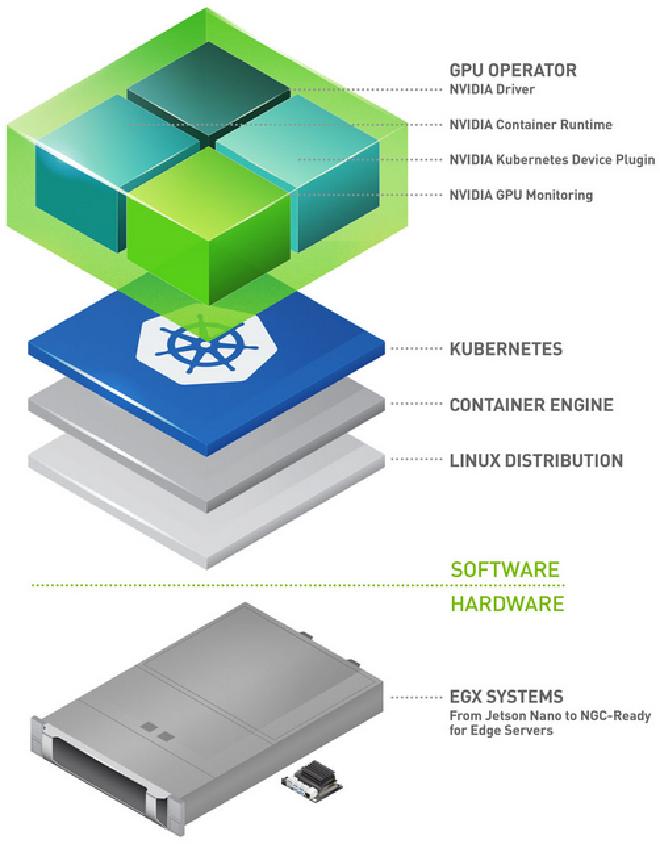

Two pieces make GPU passthrough to KubeVirt VMs work. On the host, the GPU is handed to the VM as a PCI device through VFIO, which requires the IOMMU to be enabled. On the Kubernetes side, the Device Plugin framework allows resources like GPUs to be advertised, managed, and allocated to Pods and VMs in the cluster.

For NVIDIA GPUs, this is the job of an NVIDIA device plugin, which the GPU Operator deploys. The plugin discovers the GPUs on each node and advertises them to Kubernetes as an extended resource. When a Pod or VM requests that resource, the scheduler places it on a node with a free GPU, and the kubelet on that node assigns the device to it.

Deploying VMs with NVIDIA GPUs #

Now, let’s look at how to deploy a VM with NVIDIA GPUs in OpenShift Virtualization.

Step 1: Install the NVIDIA GPU Operator #

The NVIDIA GPU Operator manages the GPU resources in your OpenShift cluster. To install it, you can use the OperatorHub in the OpenShift web console:

- Navigate to the OperatorHub and search for “NVIDIA GPU Operator”.

- Click on the NVIDIA GPU Operator and then click “Install”.

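- Follow the prompts to complete the installation, then create a ClusterPolicy instance from the operator’s page so that the operator actually deploys its components to the GPU nodes.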
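If you would rather install from the CLI or keep the install in Git, the console steps above correspond roughly to the manifests below. Treat this as a sketch: the namespace, the OperatorGroup name, and especially the `channel` value are assumptions, so check what OperatorHub lists for your cluster version before applying it with `oc apply -f`.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: nvidia-gpu-operator          # assumption: commonly used namespace
---
apiVersion: operators.coreos.com/v1
kind: OperatorGroup
metadata:
  name: nvidia-gpu-operator-group    # illustrative name
  namespace: nvidia-gpu-operator
spec:
  targetNamespaces:
    - nvidia-gpu-operator
---
apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  name: gpu-operator-certified
  namespace: nvidia-gpu-operator
spec:
  channel: stable                    # assumption: verify the channel in OperatorHub
  installPlanApproval: Automatic
  name: gpu-operator-certified       # package name in the certified catalog
  source: certified-operators
  sourceNamespace: openshift-marketplace
```

The ClusterPolicy still has to be created afterwards, just as with the console install.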

Step 2: Enable GPU Passthrough #

Next, you need to enable GPU passthrough for KubeVirt. This is done by adding GPU to the spec.configuration.developerConfiguration.featureGates list in the KubeVirt custom resource:

    apiVersion: kubevirt.io/v1
    kind: KubeVirt
    metadata:
      name: kubevirt
      namespace: kubevirt
    spec:
      configuration:
        developerConfiguration:
          featureGates:
            - GPU
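One caveat: on OpenShift Virtualization the KubeVirt resource is normally managed by the HyperConverged Operator, so host devices are usually permitted through the HyperConverged custom resource instead. Here is a hedged sketch of what that could look like. The PCI vendor:device ID and the resource name are example values for a Tesla T4 and are assumptions about your hardware; substitute the values for the GPU you actually have.

```yaml
apiVersion: hco.kubevirt.io/v1beta1
kind: HyperConverged
metadata:
  name: kubevirt-hyperconverged
  namespace: openshift-cnv
spec:
  permittedHostDevices:
    pciHostDevices:
      # Example values only (Tesla T4); look up your GPU's vendor:device ID.
      - pciDeviceSelector: "10DE:1EB8"
        resourceName: "nvidia.com/TU104GL_Tesla_T4"
        # true means an external device plugin (here, NVIDIA's) advertises
        # the resource, rather than KubeVirt's built-in one.
        externalResourceProvider: true
```

The `resourceName` here is what a VM later refers to in its `deviceName` field, so the two have to match exactly.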

Step 3: Create a VM with a GPU #

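Finally, you can create a VM with a GPU. In the VM’s YAML definition, add a gpus list under spec.template.spec.domain.devices. Each entry requests one GPU: deviceName is the resource name advertised by the device plugin (it must match what your nodes actually expose), and name is a label of your choosing for the device within the VM: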

    apiVersion: kubevirt.io/v1
    kind: VirtualMachine
    metadata:
      name: meatyvm
    spec:
      running: false
      template:
        metadata:
          labels:
            kubevirt.io/domain: meatyvm
        spec:
          domain:
            devices:
              disks:
                - disk:
                    bus: virtio
                  name: containerdisk
              gpus:
                # deviceName must match the GPU resource name advertised on the node
                - deviceName: nvidia.com/GPU
                  name: gpu1
            resources:
              requests:
                memory: 1G
          volumes:
            # The GPU is attached through the gpus entry above; no volume is needed for it
            - name: containerdisk
              containerDisk:
                image: kubevirt/cirros-container-disk-demo

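This configuration allocates one NVIDIA GPU to the VM. Because running is set to false, the VM is only defined at this point; start it (for example with virtctl start meatyvm) to have it scheduled onto a node with a free GPU.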
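The number of GPUs a VM receives is simply the number of entries in the gpus list. As a sketch, assuming the node has at least two free GPUs of the same resource type, this fragment of the VM spec would request two. The `gpu2` name is hypothetical, and `nvidia.com/GPU` is carried over from the example above.

```yaml
spec:
  template:
    spec:
      domain:
        devices:
          gpus:
            # One list entry per GPU; each needs a unique name within the VM.
            - deviceName: nvidia.com/GPU
              name: gpu1
            - deviceName: nvidia.com/GPU
              name: gpu2   # hypothetical second device
```

If no single node can satisfy the request, the VM’s launcher Pod will stay Pending until one can.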

Conclusion #

Leveraging NVIDIA GPUs in OpenShift Virtualization opens up a world of possibilities for GPU-intensive tasks like ML model training. By following the steps outlined in this post, you can set up your own GPU-enabled VMs in no time.

Remember, the specific hardware and GPU model you choose will depend on your workload requirements, so take the time to evaluate your needs carefully. Also, this example provisions an entire GPU to a single VM. Splitting a supported GPU into vGPUs and sharing them across VMs requires quite a bit of additional configuration. Look out for a post on this in the future, and in the meantime see the Red Hat blog post on enabling vGPU in the references below.

That’s all for now, folks!

References #

- OpenShift Virtualization Documentation

- Enabling vGPU in a Single Node using OpenShift Virtualization

- NVIDIA GPU Operator

- KubeVirt Documentation

- Powering GeForce NOW with KubeVirt, from OpenShift Commons Gathering, Amsterdam 2023

- NVIDIA GPU Operator: Simplifying GPU Management in Kubernetes | NVIDIA Technical Blog